Things to be tested:

For this session we focused on two areas: choosing the specific buttons to incorporate into our device, and completing the code for the navigation routine that would be sent to the Apple TV unit as IR signals.

Buttons:

We had three sets of buttons to test, each requiring a different amount of pressure to activate: a pair of 3″ round arcade-style buttons, a pair of 2″ round arcade-style buttons, and a pair of index-card-sized, flat, rectangular buttons with pictures of the corresponding movies on their faces. The round buttons had large images of the movie selections on a board behind them.

Code:

We had asked the family to bring their Apple TV unit so the code our team had developed could be tested and polished on the intended device.

The Test:

When the family arrived, Benjamin clearly remembered our previous testing session and seemed eager and excited to be back. As soon as everyone was settled, Willie and Daniel began working with the family’s Apple TV unit, while Ming Min and I tested the button options with Benjamin. Once again we used a “Wizard of Oz” technique: Benjamin was asked to select a movie using the buttons, and we would then play the movie on our laptops. Although an indicator light showed whether a button press had registered, there was no actual connection between the buttons and the movie player devices.

The largest buttons required the most pressure to activate. We asked Ben to try each button style multiple times. Out of many tries with each set, the only set that produced any result was the rectangular picture buttons, and even those activated successfully only once or twice.

Ben using the rectangular picture buttons

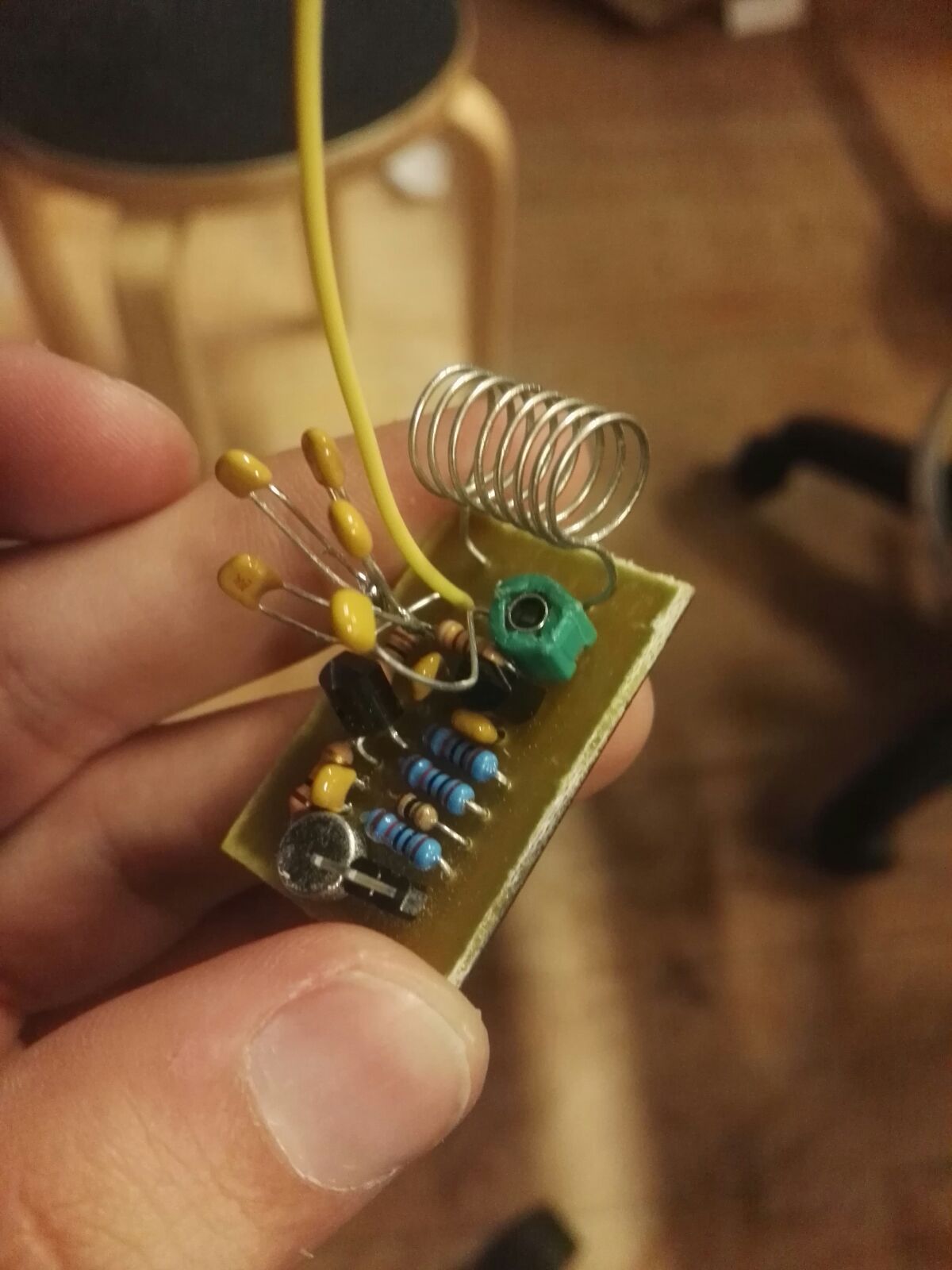

Seeing that Ben’s light touch was a problem, we quickly assembled a set of three capacitive touch buttons, hoping Ben would activate them simply by touching the image of the movie he wanted to watch. Unfortunately, these also failed to register Ben’s touch.

Without the very sensitive AbleNet buttons we used in the first test session, our options were limited. The rectangular picture buttons had the benefit of looking similar to the iPad selection system Ben had been training with, and Ben’s mom felt that with some training, Ben could learn to apply more pressure and successfully activate them.

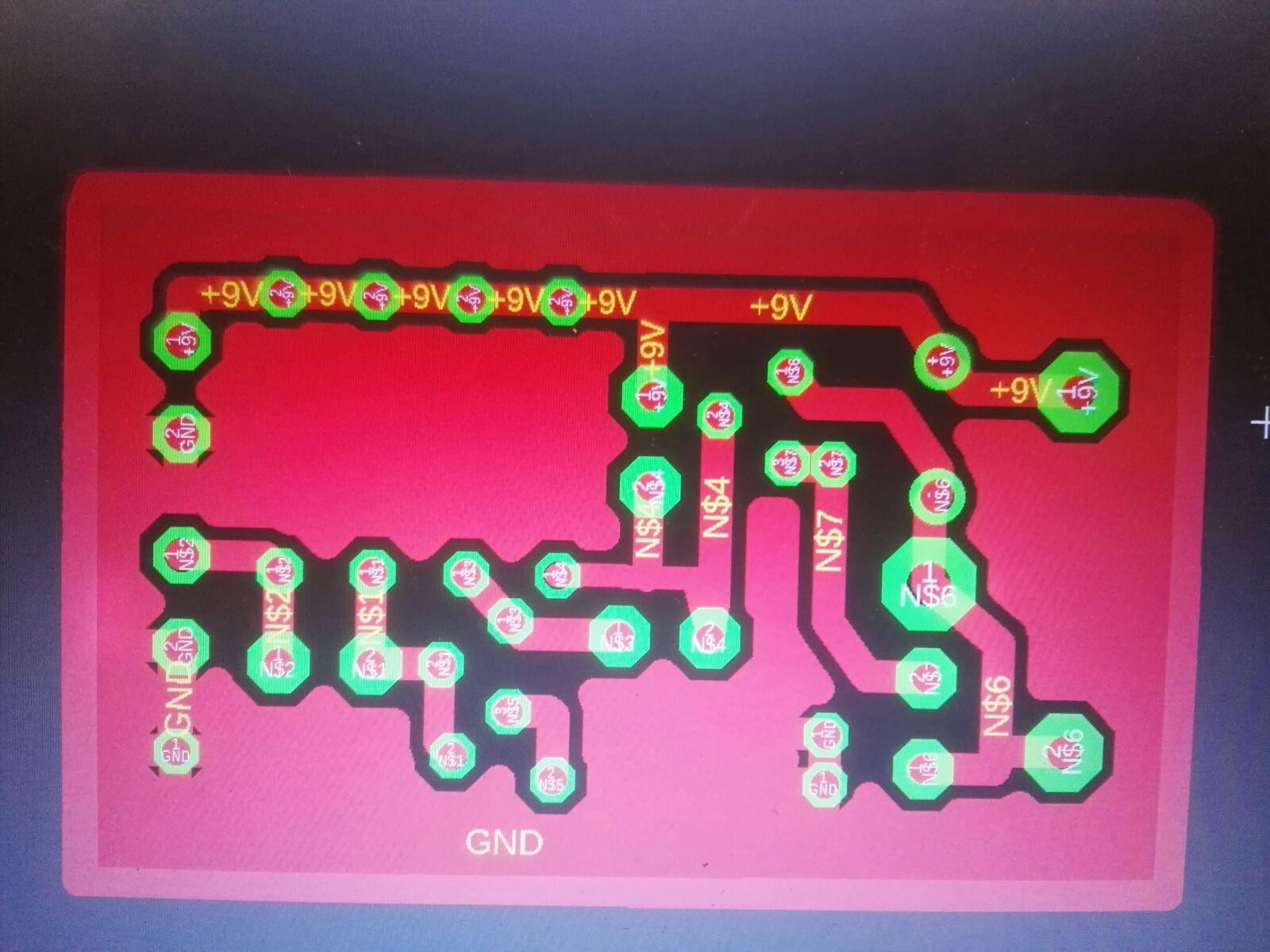

As the session concluded, Willie and Daniel demonstrated the code on the family’s Apple TV. The many steps required to reach the desired movie through the IR remote system made the process much slower than we had hoped, but the code worked!

Team Member Conclusions:

WILLIE

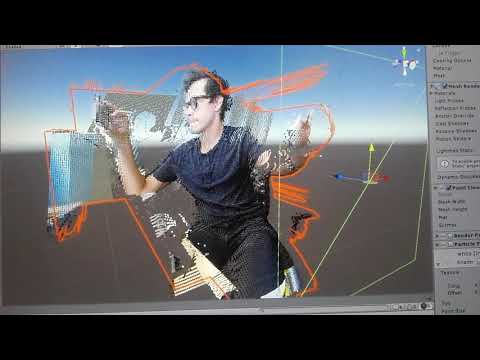

Our controller solution involves sending a list of commands that automatically navigates to a movie after Ben presses the corresponding button. This is challenging for three main reasons. First, there are a whole lot of screens! A single movie alone requires at least four button presses to start once it has been selected, and just getting to that movie requires multiple arrow and select presses. The sequence also changes depending on which screen the family left the Apple TV on. Second, to reduce button presses for most users, the Apple TV keeps track of its current state to speed up navigation. For example, if a user has recently been watching “Tangled” and then navigates Home, the next time the user goes to Movies, the Apple TV skips most of the movie screens and navigates directly to playing “Tangled.” This is problematic for us because it means there is no fixed route to a given movie. Third, the Apple TV only accepts one input at a time, and there is some latency before it can accept another. This means our solution must wait briefly after sending each command.

Our solution to the first problem simply involved lots of trial and error with the family’s Apple TV to produce the correct list of inputs for each movie. For the second problem, we worked out several ways to “reset” the device: at the start, the sequence moves the Apple TV to Home, and every time it reaches a new screen (Home, Movies, Purchased, etc.) it moves the cursor to the top left. (This is particularly challenging on the Home screen, because the same button can have different results, such as triggering the screensaver, depending on where the cursor is when the input is received.) Finally, solving the third problem required sending inputs as fast as possible most of the time, but more slowly at key events to give the Apple TV time to respond, e.g. when switching between the screensaver and the Home screen, or when loading a movie. As a result, our solution appears fairly robust, but it is very slow (over 40 seconds) because it accounts for all the possible states and timing differences. It is unclear whether Ben will be able to wait long enough for the movie to start, or learn that the process takes time.
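The reset-then-navigate approach described above can be sketched roughly as follows. This is a minimal illustration in Python, not the team’s actual selector code: the command names, the `FAST` and `SLOW` pause lengths, and the route to “Tangled” are hypothetical placeholders, and `send` stands in for whatever actually emits the IR signal.

```python
import time
from typing import Callable, List, Tuple

# (command, seconds to wait after sending it). Command names are hypothetical
# placeholders, not the real IR codes for the family's Apple TV remote.
Step = Tuple[str, float]

FAST = 0.4  # assumed pause between ordinary presses
SLOW = 4.0  # assumed pause at slow transitions (screensaver -> Home, movie loading)


def reset_steps() -> List[Step]:
    """Hypothetical 'reset' prefix: back out to Home, then park the cursor top-left."""
    steps: List[Step] = [("MENU", SLOW), ("MENU", SLOW)]
    steps += [("UP", FAST)] * 4
    steps += [("LEFT", FAST)] * 4
    return steps


def build_sequence(route: List[Step]) -> List[Step]:
    """Prepend the reset so a hand-tuned route always starts from a known state."""
    return reset_steps() + list(route)


def total_seconds(steps: List[Step]) -> float:
    """Lower bound on how long the viewer waits: the sum of all pauses."""
    return sum(delay for _, delay in steps)


def run_sequence(steps: List[Step],
                 send: Callable[[str], None],
                 sleep: Callable[[float], None] = time.sleep) -> None:
    """Send one command at a time, pausing after each so the Apple TV can keep up."""
    for command, delay in steps:
        send(command)
        sleep(delay)


# Hypothetical route to one movie, of the kind found by trial and error on a real device.
TANGLED_ROUTE: List[Step] = [
    ("RIGHT", FAST), ("SELECT", SLOW),  # open Movies
    ("DOWN", FAST), ("SELECT", SLOW),   # open Purchased
    ("RIGHT", FAST), ("SELECT", SLOW),  # open the movie's page
    ("SELECT", SLOW),                   # Play
]
```

Passing `send` and `sleep` in as parameters lets the sequence be checked without hardware, e.g. `run_sequence(build_sequence(TANGLED_ROUTE), send=print, sleep=lambda s: None)`. Summing the pauses with `total_seconds` also shows why a robust sequence gets long: every `SLOW` pause added for safety adds directly to the time Ben has to wait.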

MING MIN

We need more organized preparation and setup before each trial.

The group focused on getting through the trials with all the devices, but the environment appeared more stimulating than in the previous session, which made it harder for Ben to focus on tasks.

Good teamwork in building solutions on the spot when the original plans were not producing the desired outcomes.

DANIEL

The time to get to the movie is too long, and Ben may leave the room before it starts.

He gets distracted if we have more displays around, and he is very responsive to sound.

Capacitive touch seems like a good technology if we can find more sensitive hardware: he is clearly familiar with the iPad, so he touches lightly.

12/4/18 Review Session with Professors

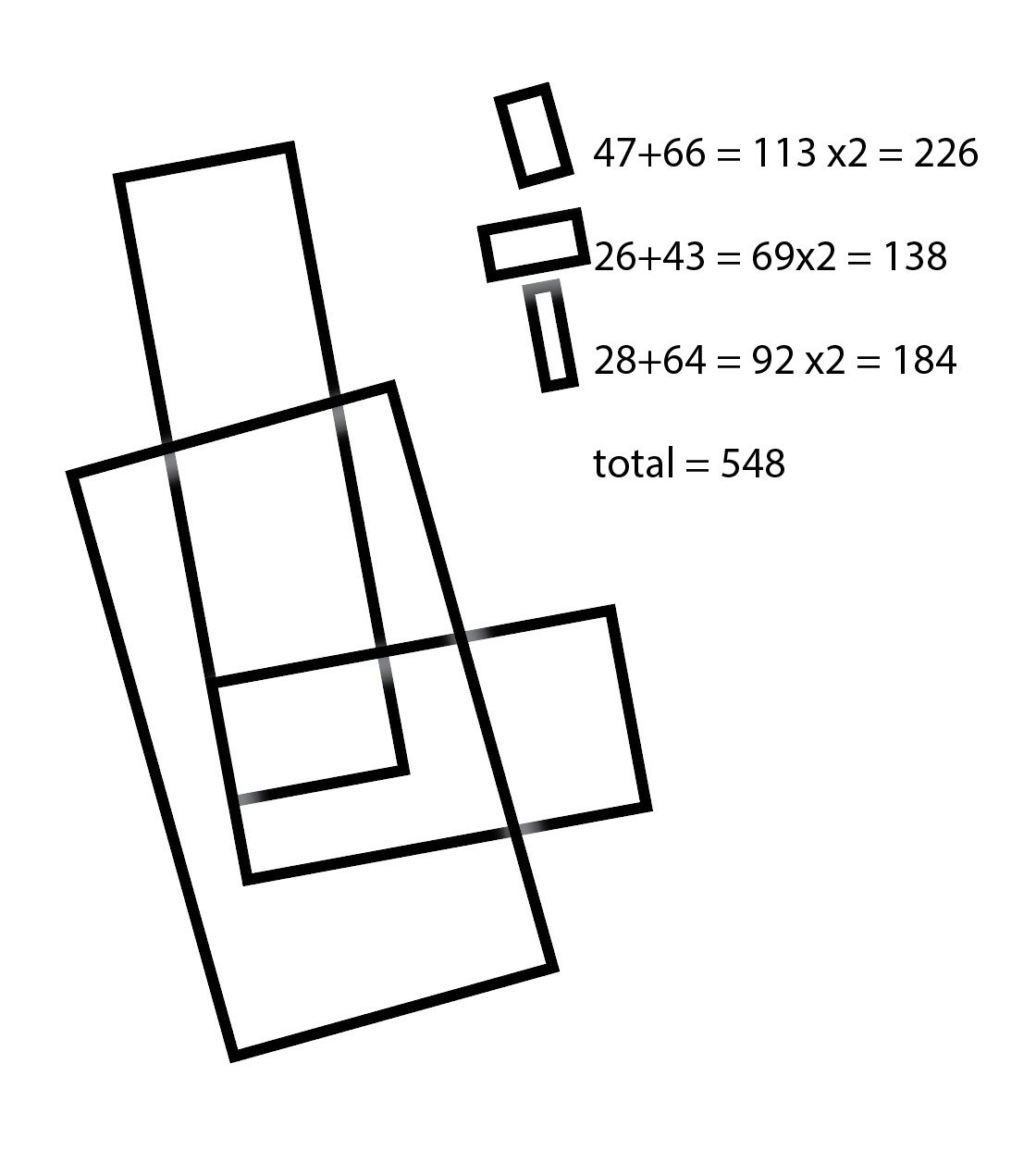

Using prototypes from the user testing session, we described the outcome of the second user test. Scott suggested that, given the very light pressure Ben applies in making a selection, a “leaf switch” might be a good solution. Though we did prototype a “leaf switch” selector for our final presentation, it was too late for us to test it with Ben. Supporting our team’s conclusion, our advisors suggested that we build and finish the rectangular picture button option, both for presentation to our class and for Ben and his family to use.

12/11/18 Final Presentation

Final Presentation:

https://docs.google.com/presentation/d/1rkg-aKnnx5Zyn5_0QQe81aV9j4RademWq-H13znOvLA/edit?usp=sharing

Final Code for the IR Apple TV selector:

https://drive.google.com/open?id=1FRx76aGJQa9P_gY1CesyBQMglOVqjFSE

Documentation/Instructions for Updating the Selector with New Movie Options:

https://docs.google.com/document/d/1b8xrPbep_4eVlIVKyaw8K4w2gYEx6adLK81PixZggvw/edit?usp=sharing